Voice Signatures Recipes

Overview

You can use the DeepTone™ SDK to identify the same speaker across different audio samples. Check out the Speaker Detection Recipes to get familiar with the Speaker Map model.

In this section we will look at the following use case:

- Create voice signatures of known speakers and detect the same speakers in new, unseen audio data (Example 1)

Pre-requisites

- DeepTone™ with a license key and models
- Audio files to process:
  - An audio file that contains only one speaker, very little silence and is not too long (<10 seconds)
  - An audio file that contains multiple speakers, including the speaker from the first file

Recognise a speaker - Example 1

To recognise a particular speaker in a file, we first need to create a voice signature of that speaker. For this, we need an audio file that contains only one speaker, very little silence and is not too long, such as this example file: Teo
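Of the three sample requirements, only the length can be checked automatically. The sketch below is one way to do that before creating a signature. It uses only Python's standard-library `wave` module, and it assumes the sample is an uncompressed PCM WAV file. The helper names and the in-memory demo file are hypothetical and not part of the DeepTone™ SDK.

```python
import io
import wave

# Upper bound from the prerequisites: "not too long (<10 seconds)"
MAX_SAMPLE_SECONDS = 10.0


def wav_duration_seconds(source) -> float:
    """Duration of a PCM WAV file in seconds, read from its header.

    `source` can be a file path or a binary file-like object.
    """
    with wave.open(source, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def is_short_enough(source, limit: float = MAX_SAMPLE_SECONDS) -> bool:
    # Checks length only; "one speaker" and "little silence" still need a listen
    return wav_duration_seconds(source) < limit


def make_silent_wav(seconds: float, rate: int = 16000) -> io.BytesIO:
    """Build an in-memory mono 16-bit WAV of silence (demo data only)."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)
        out.setframerate(rate)
        out.writeframes(b"\x00\x00" * int(seconds * rate))
    buffer.seek(0)
    return buffer


print(wav_duration_seconds(make_silent_wav(3)))  # 3.0
print(is_short_enough(make_silent_wav(12)))  # False
```

With a real sample you would call `is_short_enough("teo.wav")` before passing the file to `create_voice_signatures`.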

Let's use this short snippet to create a voice signature:

from deeptone import Deeptone

# Initialise Deeptone
engine = Deeptone(license_key="...")

voice_signatures = engine.create_voice_signatures(speaker_samples=[
    {"speaker_id": "teo", "audio": "teo.wav"},
])

# voice_signatures is a dictionary with the key "teo" and the voice signature as its value

We can now use this voice signature to check whether the speaker "teo" is talking in a new audio file. Let's use this sample audio: Two Speakers.

output = engine.process_file(
    filename="audio_sample_speaker_map.wav",
    models=[engine.models.SpeakerMap],
    output_period=1024,
    include_summary=True,
    include_transitions=True,
    voice_signatures=voice_signatures,
    include_voice_signatures=True
)

# Print the summary to see if the speaker "teo" is talking in the analysed file
print(output["channels"]["0"]["summary"]["speaker-map"])

The summary will look something like this:

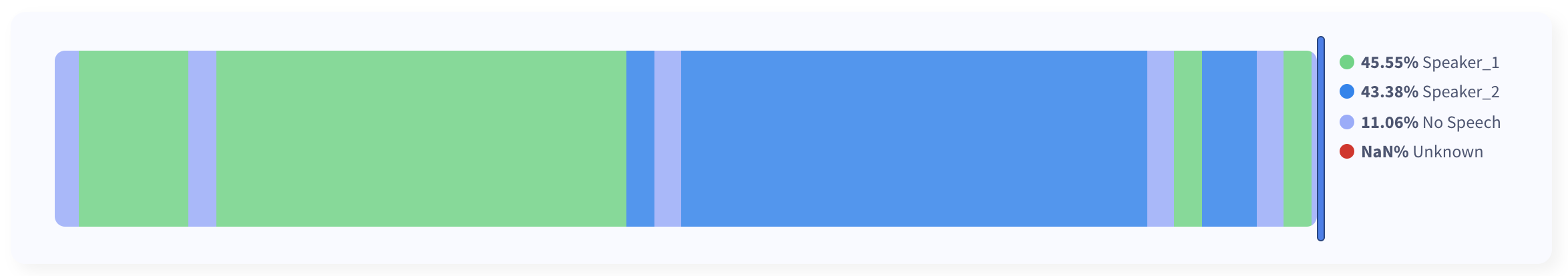

{
    "no_speech_fraction": 0.1106,
    "teo_fraction": 0.4555,
    "speaker_2_fraction": 0.4338,
    "speaker_count": 2
}
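To answer "is teo talking?" in code instead of by eye, you can pull the per-speaker fractions out of this summary. The sketch below assumes the `<speaker>_fraction` key naming seen in the example output. The `min_fraction` threshold is a hypothetical parameter of this helper, not an SDK option. The summary values are copied from the output above.

```python
# Summary as printed above (values copied from the example output)
summary = {
    "no_speech_fraction": 0.1106,
    "teo_fraction": 0.4555,
    "speaker_2_fraction": 0.4338,
    "speaker_count": 2,
}

SUFFIX = "_fraction"


def speaker_fractions(summary: dict) -> dict:
    """Map each speaker name to its fraction, skipping non-speaker keys."""
    return {
        key[: -len(SUFFIX)]: value
        for key, value in summary.items()
        if key.endswith(SUFFIX) and key != "no_speech_fraction"
    }


def is_speaking(summary: dict, speaker_id: str, min_fraction: float = 0.0) -> bool:
    # A speaker missing from the summary counts as a fraction of 0.0
    return speaker_fractions(summary).get(speaker_id, 0.0) > min_fraction


print(speaker_fractions(summary))  # {'teo': 0.4555, 'speaker_2': 0.4338}
print(is_speaking(summary, "teo"))  # True
```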

The model found the speaker "teo" and a new speaker, "speaker_2". Because include_voice_signatures was set to True, the output also contains the updated voice signatures, including one for the newly found speaker_2.

Let's give the new speaker a name:

updated_voice_signatures = output["channels"]["0"]["voice_signatures"]

# Rename the newly found speaker
updated_voice_signatures["lourenco"] = updated_voice_signatures.pop("speaker_2")
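The one-line rename above modifies the returned dictionary in place. If the new name is already taken, the rename silently overwrites that signature. The sketch below is a slightly more defensive variant. It assumes, as the `.pop` call suggests, that voice signatures are a plain dictionary keyed by speaker ID. `rename_speaker` is a hypothetical helper that treats the signature values as opaque. The placeholder strings only stand in for real signatures.

```python
def rename_speaker(signatures: dict, old_id: str, new_id: str) -> dict:
    """Return a copy of `signatures` with `old_id` renamed to `new_id`.

    Signature values are treated as opaque and copied unchanged.
    """
    if old_id not in signatures:
        raise KeyError(f"no voice signature for {old_id!r}")
    if new_id in signatures:
        raise ValueError(f"a voice signature for {new_id!r} already exists")
    renamed = dict(signatures)  # shallow copy: the caller's dict is left untouched
    renamed[new_id] = renamed.pop(old_id)
    return renamed


# Placeholder strings stand in for the real signature objects
sigs = {"teo": "<sig-teo>", "speaker_2": "<sig-2>"}
print(rename_speaker(sigs, "speaker_2", "lourenco"))
# {'teo': '<sig-teo>', 'lourenco': '<sig-2>'}
```

Returning a copy keeps the original `output` untouched, so you can still compare both runs later.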

Now we can process the same file again with the updated voice signatures and check the summary:

output_with_both_voice_signatures = engine.process_file(
    filename="audio_sample_speaker_map.wav",
    models=[engine.models.SpeakerMap],
    output_period=1024,
    include_summary=True,
    include_transitions=True,
    voice_signatures=updated_voice_signatures
)

# Print the summary to check if both speakers are now found
print(output_with_both_voice_signatures["channels"]["0"]["summary"]["speaker-map"])

The summary now looks something like this:

{
    "no_speech_fraction": 0.1106,
    "teo_fraction": 0.4338,
    "lourenco_fraction": 0.4555,
    "speaker_count": 2
}

We can see that the fractions for "teo" and "lourenco" changed slightly from the first run. To see where the two runs differ, we can export the transitions of both outputs as label files and compare them in Audacity:

# Save the transitions of the first run (only teo's signature) to a .txt file.
# Timestamps are divided by 1000 to convert milliseconds to the seconds Audacity expects.
with open("output_labels.txt", "w") as f:
    for transition in output["channels"]["0"]["transitions"]["speaker-map"]:
        f.write(f"{transition['timestamp_start'] / 1000.0}\t{transition['timestamp_end'] / 1000.0}\t{transition['result']}\n")

# Save the transitions of the second run (both signatures) to a .txt file
with open("output_with_both_signatures_labels.txt", "w") as f:
    for transition in output_with_both_voice_signatures["channels"]["0"]["transitions"]["speaker-map"]:
        f.write(f"{transition['timestamp_start'] / 1000.0}\t{transition['timestamp_end'] / 1000.0}\t{transition['result']}\n")

After running the script, you will find the files output_labels.txt and output_with_both_signatures_labels.txt in your working directory. Open the audio file in Audacity and import each file as labels (File -> Import -> Labels...).
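The two export loops differ only in their input and file name, so they can be folded into one function. The sketch below assumes what the loops imply: each transition has `timestamp_start` and `timestamp_end` in milliseconds and a `result` label. It writes Audacity's tab-separated `start<TAB>end<TAB>label` lines in seconds. `write_audacity_labels` is a hypothetical helper, and the demo transitions are made up for illustration.

```python
import io
from typing import Iterable, Mapping, TextIO


def write_audacity_labels(transitions: Iterable[Mapping], out: TextIO) -> int:
    """Write transitions as Audacity label lines; return how many were written."""
    count = 0
    for t in transitions:
        # Milliseconds -> seconds, which is what Audacity label files use
        start = t["timestamp_start"] / 1000.0
        end = t["timestamp_end"] / 1000.0
        out.write(f"{start}\t{end}\t{t['result']}\n")
        count += 1
    return count


# Synthetic transitions, invented for this demo only
demo = [
    {"timestamp_start": 0, "timestamp_end": 1024, "result": "teo"},
    {"timestamp_start": 1024, "timestamp_end": 5120, "result": "speaker_2"},
]
buffer = io.StringIO()
write_audacity_labels(demo, buffer)
print(buffer.getvalue(), end="")

# With the recipe's outputs, each export becomes, e.g.:
# with open("output_labels.txt", "w") as f:
#     write_audacity_labels(output["channels"]["0"]["transitions"]["speaker-map"], f)
```

Accepting any text stream, not a file name, means the same function can be tested with `io.StringIO` and used with real files unchanged.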

Providing both voice signatures to the process_file function improves the predictions compared to providing the voice signature of only one speaker. Because the model knows about both speakers from the beginning, it identifies the speaker "lourenco" more quickly when he starts talking.

Comparing the transitions of the two runs shows the difference. With only the voice signature of "teo", the model takes longer to pick up the second speaker. With the voice signatures of both speakers, the model detects the start of lourenco's turn sooner.
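To put a number on "detects lourenco's turn sooner", you can look up when each run first assigns the second speaker's label. The sketch below assumes two things. First, transitions are in chronological order, with millisecond timestamps and a `result` label. Second, the first run labels the new speaker "speaker_2", matching its summary key. `first_onset_seconds` is a hypothetical helper, and the demo transitions are invented.

```python
from typing import Iterable, Mapping, Optional


def first_onset_seconds(transitions: Iterable[Mapping], label: str) -> Optional[float]:
    """Start time in seconds of the first transition labelled `label`, or None."""
    for t in transitions:  # assumes chronological order
        if t["result"] == label:
            return t["timestamp_start"] / 1000.0
    return None


# Invented transitions, for illustration only
demo = [
    {"timestamp_start": 0, "timestamp_end": 2048, "result": "teo"},
    {"timestamp_start": 2048, "timestamp_end": 3072, "result": "lourenco"},
]
print(first_onset_seconds(demo, "lourenco"))  # 2.048

# With the recipe's outputs, the comparison would look like:
# onset_one = first_onset_seconds(
#     output["channels"]["0"]["transitions"]["speaker-map"], "speaker_2")
# onset_both = first_onset_seconds(
#     output_with_both_voice_signatures["channels"]["0"]["transitions"]["speaker-map"], "lourenco")
# A smaller onset_both than onset_one means the second speaker was picked up earlier.
```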